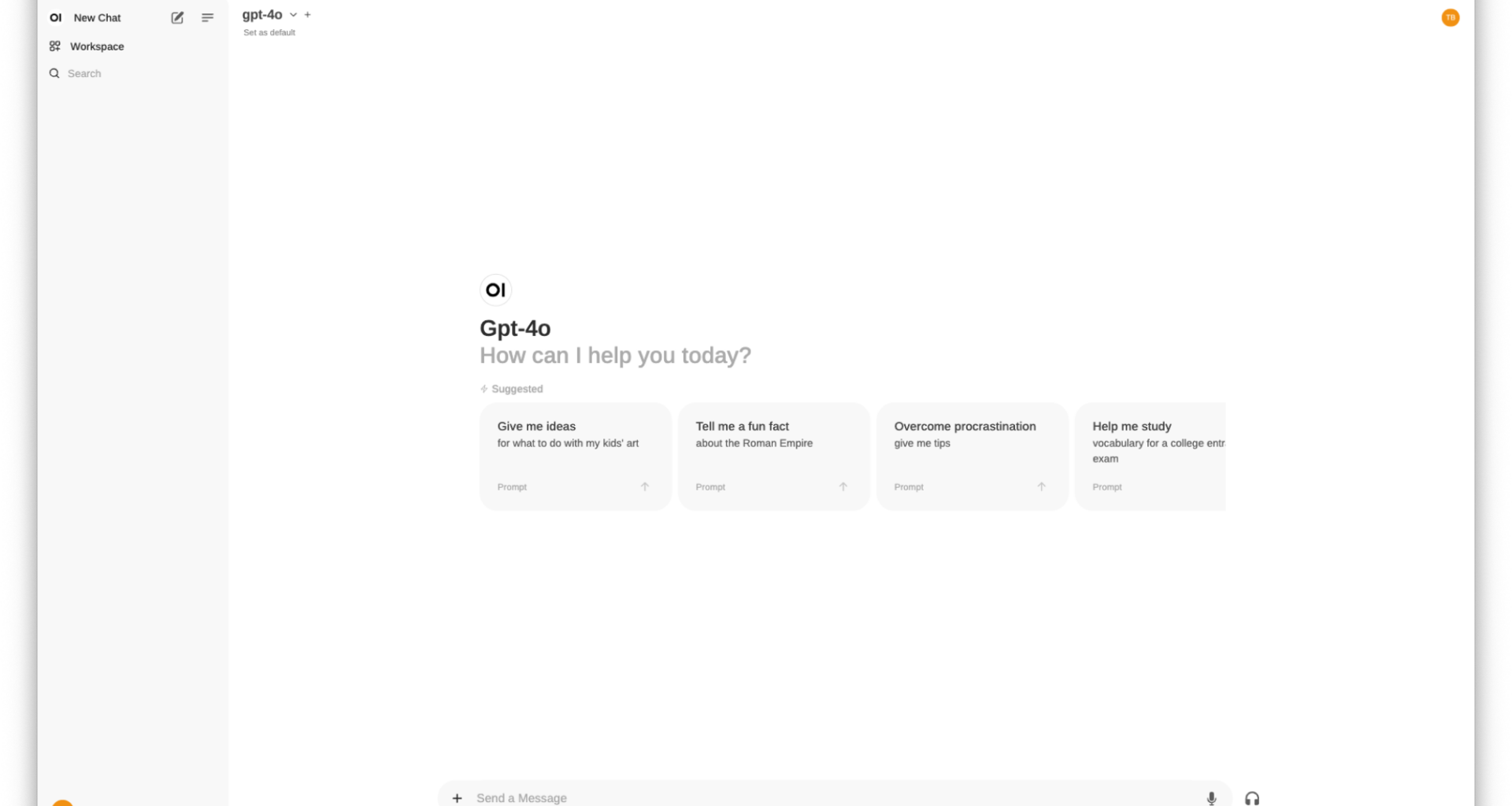

Open WebUI is an elegant, extensible, and feature-rich self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs, and is built with convenience and security in mind.

Key Highlights:

User Roles and Privacy

- Admin Creation: The first account created on Open WebUI automatically gains Administrator privileges, allowing complete control over user management and system settings.

- User Registrations: Subsequent sign-ups start in Pending status and require Administrator approval for access.

- Privacy and Data Security: All your data, including login details, is stored locally on your own machine. Open WebUI does not make any external requests, which keeps your data confidential and enhances your privacy and security.

Quick Start with Docker 🐳 (Recommended)

Important Tips:

- Disabling Login for Single User: Set `WEBUI_AUTH` to `False` to bypass the login page for a single-user setup. Note that switching between single-user mode and multi-account mode is not possible after this change.

- Data Storage in Docker: When using Docker, include `-v open-webui:/app/backend/data` in your command to ensure your database is properly mounted and to prevent data loss. You can replace the volume name with an absolute path on your host machine to link container data to a folder on your computer using a bind mount. Example: change `-v open-webui:/app/backend/data` to `-v /path/to/folder:/app/backend/data`.
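The two tips above can be combined in a single command. The sketch below wraps the default `docker run` command in a small shell function; the function name `start_open_webui`, its arguments, and the `DOCKER` override are illustrative assumptions, not part of Open WebUI. Setting `DOCKER=echo` prints the command instead of running it, which is a convenient way to check the flags first.

```bash
# Hypothetical helper (not part of Open WebUI): starts the container with
# either a named volume or a bind mount, optionally with login disabled.
# Set DOCKER=echo to print the command instead of running it.
DOCKER="${DOCKER:-docker}"

start_open_webui() {
  # $1: volume name or absolute host path for /app/backend/data (default: open-webui)
  data="${1:-open-webui}"
  # $2: pass "single" to set WEBUI_AUTH=False (single-user mode, no login page)
  auth_flag=""
  if [ "${2:-}" = "single" ]; then
    auth_flag="-e WEBUI_AUTH=False"
  fi
  # $auth_flag is left unquoted on purpose: when empty it adds no argument,
  # otherwise it splits into "-e" and "WEBUI_AUTH=False".
  $DOCKER run -d -p 3000:8080 \
    --add-host=host.docker.internal:host-gateway \
    $auth_flag \
    -v "$data:/app/backend/data" \
    --name open-webui --restart always \
    ghcr.io/open-webui/open-webui:main
}
```

For example, `start_open_webui /path/to/folder single` keeps the data in `/path/to/folder` on the host and skips the login page. Keep in mind that, as noted above, the single-user choice cannot be switched back later.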

Installation with Default Configuration

For Ollama on Your Computer:

```bash
docker run -d -p 3000:8080 \
  --add-host=host.docker.internal:host-gateway \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:main
```

For Ollama on a Different Server:

To connect to Ollama on another server, set `OLLAMA_BASE_URL` to that server's URL:

```bash
docker run -d -p 3000:8080 \
  -e OLLAMA_BASE_URL=https://example.com \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:main
```

To Run Open WebUI with NVIDIA GPU Support:

```bash
docker run -d -p 3000:8080 --gpus all \
  --add-host=host.docker.internal:host-gateway \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:cuda
```

The `--gpus all` flag requires the NVIDIA Container Toolkit to be installed on the host.

Installation for OpenAI API Usage Only

If you’re only using the OpenAI API, use this command:

```bash
docker run -d -p 3000:8080 \
  -e OPENAI_API_KEY=your_secret_key \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:main
```

Installing Open WebUI with Bundled Ollama Support

This installation method uses a single container image that bundles Open WebUI with Ollama, allowing for a streamlined setup via a single command.

With GPU Support:

```bash
docker run -d -p 3000:8080 --gpus=all \
  -v ollama:/root/.ollama \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:ollama
```

For CPU Only:

```bash
docker run -d -p 3000:8080 \
  -v ollama:/root/.ollama \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:ollama
```

Each of these commands installs Open WebUI and Ollama together, so a single command is enough to get started.

After installation, you can access Open WebUI at http://localhost:3000. Enjoy the power and flexibility of your new self-hosted WebUI!
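The first start can take a little while before the page loads. For scripted setups, a minimal sketch that waits for the UI to answer, assuming `curl` is installed on the host; the function name `wait_for_open_webui` and its arguments are hypothetical, chosen for illustration only.

```bash
# Hypothetical helper: poll Open WebUI until it answers or we give up.
# Usage: wait_for_open_webui [URL] [ATTEMPTS] [DELAY_SECONDS]
wait_for_open_webui() {
  url="${1:-http://localhost:3000}"
  attempts="${2:-30}"
  delay="${3:-2}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors as failures, -s/-S: quiet but still report errors,
    # -o /dev/null: discard the page body, --max-time: cap each request
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "Open WebUI is responding at $url"
      return 0
    fi
    # Wait before the next attempt, but not after the last one
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "No response from $url after $attempts attempt(s)" >&2
  return 1
}
```

Calling `wait_for_open_webui` right after `docker run` returns once http://localhost:3000 answers. If it never does, `docker logs open-webui` shows the container's output.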